背景

维护Presto集群,遇到了一些JVM Bug会严重导致Presto查询变慢,这里分享下Bugs表现及解决方法。

Ref Proc 耗时太久

线上Presto运行一段时间后,查询会越来越慢,打了下火焰图,发现30%的CPU都浪费在young gc上了,看了下gc log,如下:

1 |

|

从上面我们可以看到 Ref Proc: 501.3 ms,总共young gc耗时0.57s,而Ref Proc耗时就达到了0.5s。我们需要看下Ref Proc耗时主要在哪个地方。

我们通过配置gc参数-XX:+PrintReferenceGC,可以看到详细的Reference GC时间,并且如下图Case,JNI Weak Reference是主要瓶颈点。

1 | 2021-02-07T09:17:42.299+0800: 3865450.117: [GC pause (G1 Evacuation Pause) (mixed)2021-02-07T09:17:42.394+0800: 3865450.212: [SoftReference, 0 refs, 0.0020337 secs]2021-02-07T09:17:42.396+0800: 3865450.214: [WeakReference, 75 refs, 0.0014819 secs]2021-02-07T09:17:42.397+0800: 3865450.215: [FinalReference, 1447 refs, 0.0012562 secs]2021-02-07T09:17:42.398+0800: 3865450.217: [PhantomReference, 0 refs, 70 refs, 0.0021257 secs]2021-02-07T09:17:42.401+0800: 3865450.219: [JNI Weak Reference, 0.1966801 secs], 0.3046075 secs] |

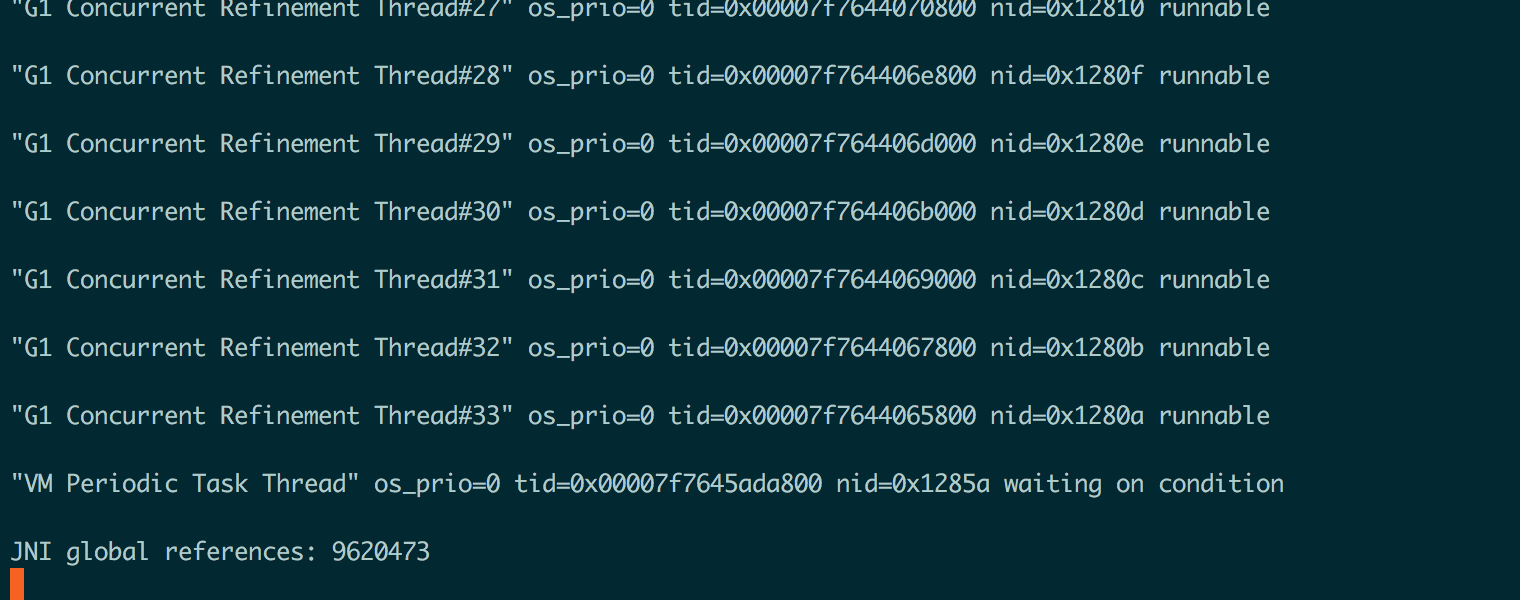

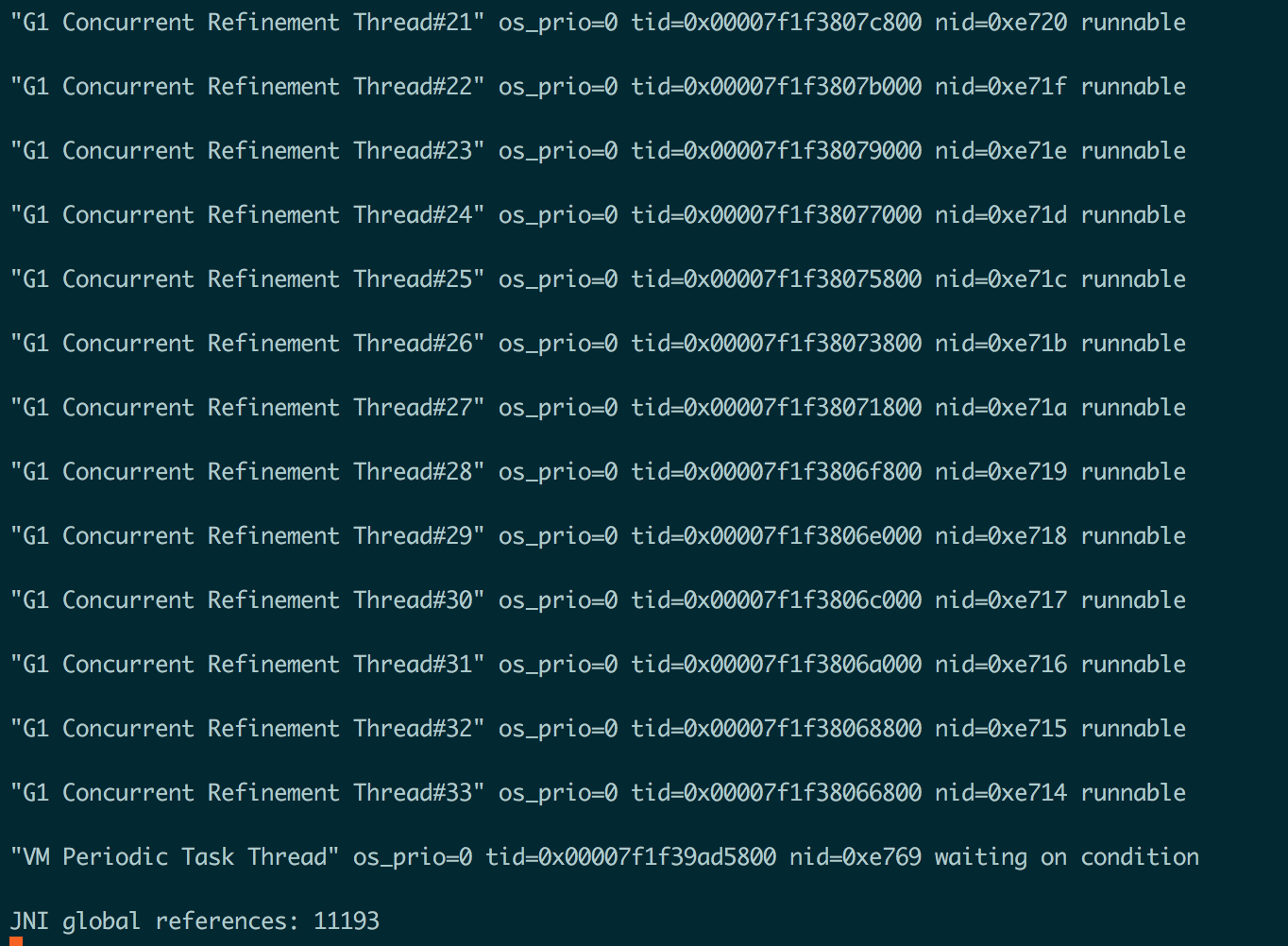

我们需要看下为啥JNI Weak Reference耗时这么久,jstack pid 看下,发现jni global references达到了960W个。

持续观察Presto,发现JNI global references会持续增长,打印了几天的数据,这个值就不会降低,一直增长,然后会导致young gc越来越慢,手动触发full gc也不会降低,几周后,可能young gc就会占用cpu 30%以上时间了。

解决方法

升级到jvm最新版本,jdk8我升级到jdk1.8.0_271版本,持续跑了三周后,jstack查看:

jvm逆优化

这个说过几次了,并且很多搞Presto的朋友也遇到或咨询过这个问题,详细见:https://mp.weixin.qq.com/s/OK39zkUj-kJfY6HYixj_Lw

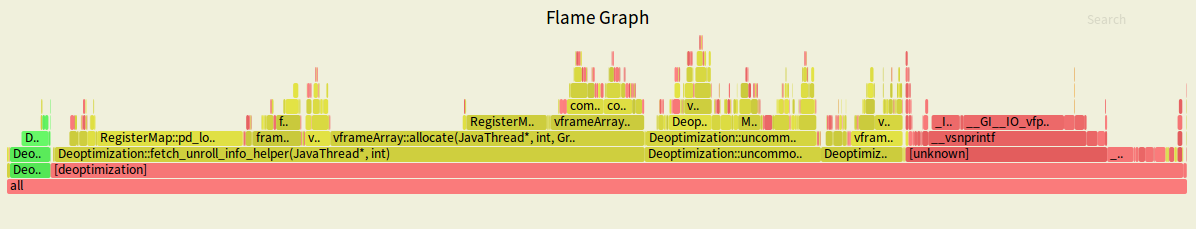

这个Bug分为两阶段,第一阶段就是低版本的jdk 8 触发逆优化后,会触发Deoptimization::Action_none,啥事不干,无限循环的状态,新版本jdk 8修复了这个问题,但是依然会导致性能变慢,打个火焰图,如下图所示:

如果你们火焰图发现程序都在做Deoptimization,那表示遇到了逆优化问题,会严重影响性能。

解决方法

修改JVM参数:

1 | -XX:ReservedCodeCacheSize=512M |

young gc Termination耗时太久

1 | 2021-01-29T22:10:48.109+0800: 341.173: [GC pause (GCLocker Initiated GC) (young)2021-01-29T22:10:50.843+0800: 343.907: [SoftReference, 0 refs, 0.0000531 secs]2021-01-29T22:10:50.843+0800: 343.907: [WeakReference, 11 refs, 0.0000136 secs]2021-01-29T22:10:50.843+0800: 343.907: [FinalReference, 9 refs, 0.0000572 secs]2021-01-29T22:10:50.844+0800: 343.907: [PhantomReference, 75 refs, 154 refs, 0.0000679 secs]2021-01-29T22:10:50.844+0800: 343.907: [JNI Weak Reference, 0.0001989 secs], 2.7381984 secs] |

由上面的gc log可以看到Termination耗时2555.8ms,Termination Attempts达到了19585次。

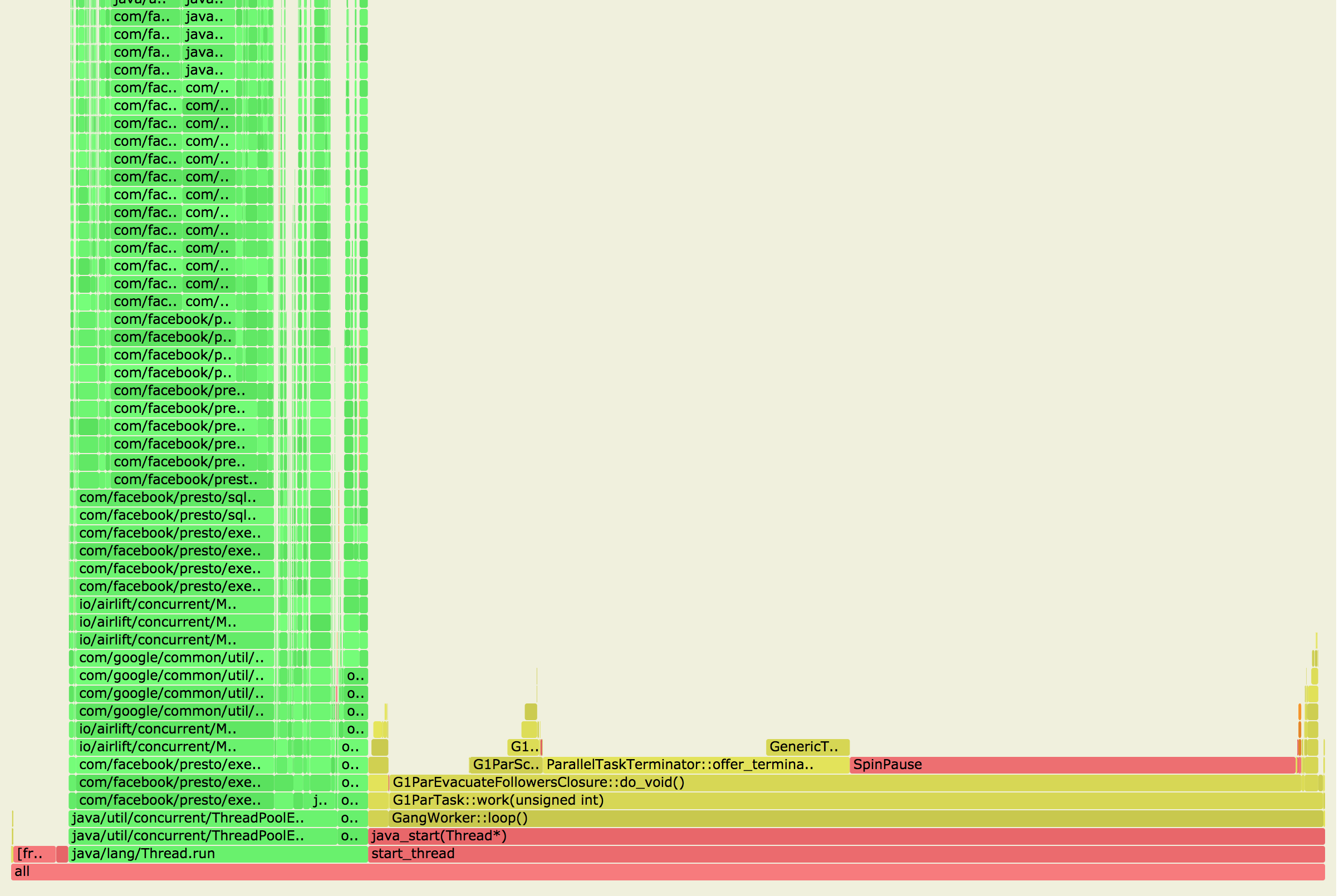

打个火焰图,如下图所示:

可以看到young gc在做offer_termination,并且会有大约30%的CPU浪费在自旋锁(SpinPause)里。

这个问题非常棘手,我们尝试过cms、g1、zgc(jdk 11,会很慢,但是瓶颈不在Termination里了),以及jdk 8、jdk 11都没有解决。后来找了专门搞jvm的同事询问了下,Termination这块逻辑,cms及g1是一样的,如果一个遇到,那这2个gc算法都会遇到,可以尝试jdk 15的zgc,看下是否能解决。

但是这个问题一旦遇到,重启也恢复不了,为了解决这个问题,我们又尝试了阿里的开源jdk: dragonwell8,发现问题解决,应该是dragonwell新加了一个参数,默认开启。

1 | -XX:+UseOWSTTaskTerminator |

原理有点像是针对大对象,不要多work去偷任务,偷了也只能忙等。

同时,我发现腾讯也应该遇到了类似问题,开源的Kona 8 JDK也解决了类似问题,如下面文档所示:

GC 并行线程通过 Work Stealing 进行负载均衡,减少 GC 停顿时间。原有 Work Stealing 算法中所有空闲 GC 线程都会频繁尝试 work stealing,造成 CPU 资源浪费,新算法采用一个 master 线程监控是否进行 work stealing 并在适当时机唤醒其它空闲 GC 线程,极大减少 CPU 资源浪费和进程间的 CPU 资源竞争。OWST 在 OpenJDK 社区版本12以后支持,大数据 Hibench 测试有最多30%的性能提升,平均提升8%。但是我测试后,发现文中提到的参数是不存在的,并且现象依然复现。

解决方法

总结下这个问题的解决方法,这个问题,目前社区还没有修复,可以试下阿里开源的dragonwell,如果不行,需要升级到JDK 15,使用zgc再尝试下。

总结

在遇到性能问题,重要的是定位性能变慢的原因,是引擎问题还是JVM问题等等,如果是JVM问题,又需要定位哪个阶段导致的问题,遇到bug也尽量去openjdk社区看下是否已经修复了这个问题,同时建议多perf看下性能瓶颈点。这里只介绍了三个常见的会严重影响Presto性能的jvm bug,而新的PrestoSQL在使用过程中,还有一些公司会遇到codegen导致性能变慢的问题,我们目前还没有遇到。同时,由于Presto新版本一般会使用很多jvm新的特性,在编译Presto时候也会遇到很多jvm bug,我在从PrestoDB升级PrestoSQL时候,其中有一天遇到了5次JVM编译Bug,这里建议各位如果遇到jvm问题,尽量升级到最新jvm版本,避免踩坑。